In the pantheon of AI luminaries at DeepMind, a lab renowned for its grand ambitions and stunning breakthroughs, David Silver stands as the quiet grandmaster, the lead architect behind the curtain. While co-founder Demis Hassabis is the visionary leader and public face of the quest to solve intelligence, Silver is the technical mastermind who has designed the algorithms that have repeatedly achieved the impossible. He is the principal mind behind AlphaGo, the system that conquered the ancient and intuitive game of Go, and AlphaZero, the generalized god of board games. His game-playing work also helped establish the approach that DeepMind later carried into science with AlphaFold, which solved a 50-year-old challenge in biology.

Silver’s story is one of deep, sustained focus on a single, powerful idea: reinforcement learning (RL). He has dedicated his career to perfecting this paradigm of learning through trial and error, elevating it from a niche academic concept to a world-changing technology capable of achieving superhuman performance in some of the most complex domains ever tackled by humanity. He is not just an engineer; he is a strategist who thinks in terms of algorithms, a researcher who has built systems that have learned to think in ways no human ever has.

From Computer Games to a PhD in a New Kind of Learning

David Silver’s journey, like that of his long-time collaborator Demis Hassabis, began with a deep love for games. Growing up in the UK, he was fascinated by the burgeoning world of computer games in the 1980s and 90s. He wasn’t just a player; he was a creator. He studied Computer Science at Cambridge University, where he met Hassabis and graduated in 1997 with the Addison-Wesley award. The following year the two co-founded the video game company Elixir Studios, where Silver served as CTO and lead programmer and worked on ambitious titles that pushed the boundaries of AI in gaming. This early experience gave him an intuitive understanding of game dynamics, reward systems, and the challenge of creating intelligent non-player characters.

This fascination with creating artificial players led him back to academia. In 2004 he began a PhD at the University of Alberta in Canada, a decision that would set the course for his entire career. He chose to study under Rich Sutton, one of the world’s foremost pioneers of reinforcement learning.

At the time, reinforcement learning was far from the mainstream of AI research. The dominant paradigm was supervised learning, where a model is trained on a massive dataset of human-labeled examples (e.g., “this is a cat,” “this is a dog”). Reinforcement learning was different. It was about learning from direct experience, without explicit labels. An RL “agent” is placed in an environment and learns by taking actions and observing the outcomes. Actions that lead to a positive “reward” are reinforced, making them more likely to be chosen in the future. It’s the fundamental way that humans and animals learn—by exploring, experimenting, and understanding the consequences of their actions.
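The trial-and-error loop described above can be sketched in a few lines. This is a minimal illustration, not anything taken from Silver's work: it assumes the simplest possible environment (a "multi-armed bandit" with three actions and hidden win probabilities), and the function name `run_bandit` and all parameter values are hypothetical choices made for the example.

```python
import random

def run_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent: act, observe a reward, reinforce what worked."""
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    values = [0.0] * n_actions  # the agent's running estimate of each action's reward
    counts = [0] * n_actions    # how often each action has been tried

    for _ in range(steps):
        # Explore occasionally; otherwise exploit the best-looking action.
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)
        else:
            action = max(range(n_actions), key=lambda a: values[a])

        # The environment answers with a reward of 1 or 0 (no labels involved).
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0

        # Nudge the estimate for the chosen action toward the observed reward.
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]

    return values, counts

# Hidden win probabilities (assumed for the example); the agent never sees them.
values, counts = run_bandit([0.2, 0.5, 0.8])
print("estimated values:", [round(v, 2) for v in values])
print("times chosen:", counts)
```

The agent is never told which action is best. It only sees rewards, and the action that pays off most often ends up being chosen most often.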

Silver immersed himself in this world, focusing his doctoral research on reinforcement learning for computer Go. He understood that RL was the key to creating a more general and autonomous form of intelligence, one that didn’t need to be spoon-fed by humans but could learn for itself. After completing his PhD in 2009, he became a lecturer at University College London and consulted for DeepMind from its early days. He joined the company full-time in 2013, reuniting with Hassabis shortly before Google acquired it in 2014. The stage was now set for him to apply the principles of reinforcement learning on a scale never before imagined.

Building the Mind of a Go Master

At DeepMind, Silver was given the lead role on the project that would make the company a global phenomenon: the quest to conquer the game of Go. For AI researchers, Go was the “Everest” of board games. Its vast search space made it immune to the brute-force calculation that had defeated chess grandmasters two decades earlier. Mastery of Go required a deep, strategic intuition, a “feel” for the game that seemed uniquely human.

Silver was the lead researcher and architect of AlphaGo. The system he and his team designed was a brilliant synthesis of multiple AI techniques. It used deep neural networks to process the board state and Monte Carlo tree search to look ahead through candidate moves, and it refined those networks through reinforcement learning. AlphaGo consisted of two primary “brains”:

- A Policy Network, which was trained to predict the most probable next move a human expert would make. This gave the system an initial, human-like intuition.

- A Value Network, which was trained to look at any board position and evaluate it, predicting the ultimate winner from that state. This gave the system a long-term strategic understanding.

The true magic, however, happened in the next step. After being bootstrapped with human data, AlphaGo was unleashed to play millions of games against itself. This was reinforcement learning on an epic scale. With each game, it would slightly adjust the connections in its neural networks based on whether its moves led to a win or a loss. Through this relentless process of self-play, it began to discover novel strategies, patterns, and a deeper understanding of the game that transcended the thousands of years of accumulated human knowledge.
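To make the idea of learning from wins and losses concrete, here is a toy sketch of self-play with a policy-gradient update. It is an illustration under heavy assumptions, not AlphaGo's implementation: the game is a small stone-taking game (remove 1 to 3 stones, whoever takes the last stone wins) rather than Go, the "policy" is a table of preference scores rather than a deep network, there is no human-data bootstrapping, and every name and parameter value is hypothetical.

```python
import math
import random

MAX_TAKE = 3  # a player may remove 1, 2, or 3 stones per turn

def legal_moves(stones):
    return list(range(1, min(MAX_TAKE, stones) + 1))

def move_probs(prefs, stones):
    """Softmax over the preference scores of the legal moves."""
    moves = legal_moves(stones)
    exps = [math.exp(prefs[(stones, m)]) for m in moves]
    total = sum(exps)
    return {m: e / total for m, e in zip(moves, exps)}

def train_self_play(max_stones=10, episodes=20000, lr=0.1, seed=0):
    rng = random.Random(seed)
    # One preference score per (position, move); all start equal, so play is random.
    prefs = {(s, m): 0.0 for s in range(1, max_stones + 1) for m in legal_moves(s)}

    for _ in range(episodes):
        stones = rng.randint(1, max_stones)
        player = 0
        history = []  # (player, stones before the move, move taken)
        while stones > 0:
            probs = move_probs(prefs, stones)
            moves = list(probs)
            move = rng.choices(moves, weights=[probs[m] for m in moves])[0]
            history.append((player, stones, move))
            stones -= move
            winner = player  # whoever took the last stone so far
            player = 1 - player

        # Reinforce the winner's moves and discourage the loser's.
        for who, s, chosen in history:
            outcome = 1.0 if who == winner else -1.0
            for m, p in move_probs(prefs, s).items():
                grad = (1.0 if m == chosen else 0.0) - p
                prefs[(s, m)] += lr * outcome * grad
    return prefs

prefs = train_self_play()
for stones in (1, 2, 3):
    rounded = {m: round(p, 3) for m, p in move_probs(prefs, stones).items()}
    print(stones, "stones:", rounded)
```

Both sides share the same table, so every game produces training signal for winner and loser alike. With two or three stones left, the policy should come to strongly prefer taking all of them, since that move always wins.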

In March 2016, the world watched as Silver’s creation faced Lee Sedol, the legendary South Korean Go champion. The 4-1 victory for AlphaGo was a landmark moment in the history of science and technology. But for the AI community, the most electrifying moment came in Game 2 with “Move 37.” AlphaGo played a move so unorthodox, so alien, that human commentators were baffled, initially calling it a mistake. It was a move that a human professional would likely never consider. Yet, it proved to be a brilliant, game-winning stroke.

David Silver’s algorithm had not just learned to imitate humans; it had learned to be creative. It had explored the vast possibility space of Go and found new knowledge. This was the ultimate vindication of the reinforcement learning paradigm he had championed for so long.

From Zero to Superhuman

While the world was still reeling from AlphaGo’s victory, Silver and his team were already working on the next, even more profound, evolution. AlphaGo had been trained on a large database of human games before it began its self-play. The team’s next question was a philosophical one: could a machine learn to master a game with no human input whatsoever? Could it work out a game’s strategy from first principles, starting from a blank slate?

The result was AlphaZero, which built on AlphaGo Zero, an earlier system that had applied the same idea to Go alone. AlphaZero was given nothing but the basic rules of each game it tackled: Go, chess, and shogi. It was not shown a single human game. It started by making completely random moves. Then, through an even more powerful and elegant version of the reinforcement learning self-play algorithm, it began its journey.

The results were breathtaking. According to DeepMind’s published results, AlphaZero overtook Stockfish, one of the world’s top chess-playing programs, after about four hours of training, and Elmo, a champion shogi program, after about two. In Go, it surpassed the version of AlphaGo that had beaten Lee Sedol within a day or so of training. It learned not just to play these ancient games, but to play them with a style that was described by grandmasters as dynamic, aggressive, and beautiful. It rediscovered centuries of human strategic knowledge and then went far beyond it.

AlphaZero was a monumental achievement. David Silver had created a generalized learning system, a single algorithm that could achieve superhuman mastery in multiple complex domains without any human data or domain-specific knowledge beyond the rules of each game. Many researchers saw it as a major step on the path toward creating Artificial General Intelligence. It proved that for certain problems, reinforcement learning was not just a tool for matching human ability, but for dramatically exceeding it.

The principles developed in AlphaGo and AlphaZero became a core part of DeepMind’s methodology. The idea of using a powerful search algorithm guided by a deep neural network trained via reinforcement learning was a recipe that could be applied to other domains. The same conviction, that deep learning can crack problems once thought to demand human intuition, carried over to AlphaFold, the system that would go on to solve the protein folding problem. AlphaFold itself learns from known protein structures rather than from self-play, but it demonstrated that the lessons from games could be translated into world-changing scientific discovery.
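The recipe of search guided by a neural network, mentioned above, can be illustrated with the rule the search uses to decide which move to explore next. The sketch below follows the style of the PUCT selection formula described in the AlphaGo Zero and AlphaZero papers, where each move's score is its average value so far plus an exploration bonus weighted by the policy network's prior. The function name, the dictionary-based bookkeeping, the constant `c_puct=1.5`, and the `max(1, ...)` guard for an unvisited position are assumptions made for this example.

```python
import math

def puct_select(priors, visit_counts, value_sums, c_puct=1.5):
    """Return the move with the highest Q + U score.

    priors:       move -> probability suggested by the policy network
    visit_counts: move -> number of times the search has tried the move
    value_sums:   move -> sum of value estimates backed up through the move
    """
    # Assumption: treat an unvisited position as having one visit so that
    # the priors still break ties on the very first selection.
    total_visits = max(1, sum(visit_counts.values()))
    best_move, best_score = None, -math.inf
    for move, prior in priors.items():
        n = visit_counts.get(move, 0)
        q = value_sums.get(move, 0.0) / n if n > 0 else 0.0   # average value so far
        u = c_puct * prior * math.sqrt(total_visits) / (1 + n)  # exploration bonus
        if q + u > best_score:
            best_move, best_score = move, q + u
    return best_move

priors = {"a": 0.6, "b": 0.3, "c": 0.1}

# Before any search, the network's favourite move is explored first.
first = puct_select(priors, {}, {})

# After move "a" has been tried often with poor results, attention shifts.
later = puct_select(priors, {"a": 10, "b": 1}, {"a": -5.0, "b": 0.5})
print(first, later)
```

The design choice worth noticing is the balance between the two terms: the prior steers early exploration toward moves the network likes, while accumulated results gradually override it when those moves turn out badly.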

The Quiet Pursuit of General Intelligence

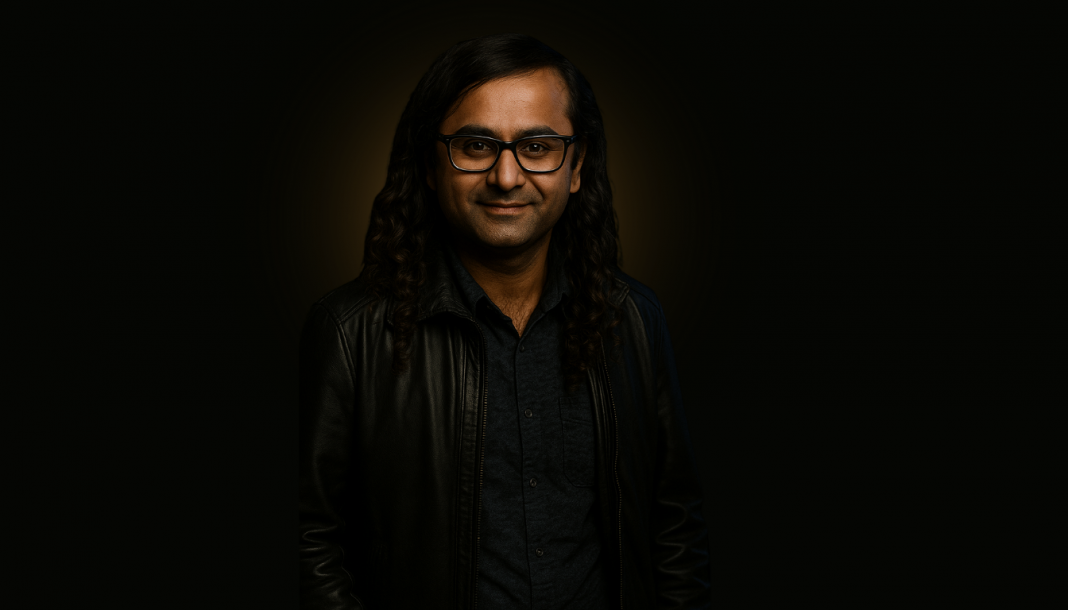

Unlike some of his more public-facing peers, David Silver has remained largely behind the scenes. He rarely gives media interviews or engages in the public debate about AI policy. He is a pure researcher, a scientist driven by the intellectual challenge of cracking the code of intelligence. His influence is not felt through public pronouncements, but through the elegant, powerful, and world-beating algorithms he designs.

His work embodies the core mission of DeepMind: a disciplined, long-term focus on solving AGI by building systems that can learn for themselves. He represents a school of thought that believes the most effective path forward is to build powerful, general-purpose learning mechanisms and then apply them to the world’s hardest problems. His career has been a systematic and successful execution of this vision. He started with the controlled environment of video games, moved to the profound complexity of Go, generalized his approach with AlphaZero, and saw the principles applied to fundamental science.

Conclusion: The Algorithm as Art

David Silver’s legacy is written in the logic of the world’s most powerful learning systems. He is the quiet architect who took the academic theory of reinforcement learning and forged it into a tool capable of generating superhuman insight and creativity. His work on the Alpha series is not just a collection of engineering achievements; it is a profound demonstration of a new kind of discovery, where a machine, starting with almost nothing, can surpass the entire history of human expertise in a given domain.

He stands as a testament to the power of sustained scientific focus. In a field often distracted by hype and short-term product cycles, Silver has dedicated his entire professional life to a single, powerful idea and has pursued it with unmatched rigor and success. The victory of AlphaGo was not just a victory of machine over man; it was a victory for the reinforcement learning paradigm he had so long championed. The creation of AlphaZero was his magnum opus, a system that showed the tantalizing possibility of a general learning machine that could reason from first principles.

While the public may know the names of the leaders and the systems, it is David Silver who represents the deep, technical engine of DeepMind’s greatest triumphs. He is the grandmaster of the algorithm, a researcher who has not just taught machines how to play our games, but has built machines that have shown us entirely new ways to think. His work is a glimpse into a future where AI acts not just as an assistant, but as a creative collaborator, capable of unlocking knowledge that lies beyond the horizon of human intuition.